Lp-WGAN: Using Lp-norm Normalization to Stabilize Wasserstein Generative Adversarial Networks

Published in Knowledge-Based Systems, 2018

Abstract:

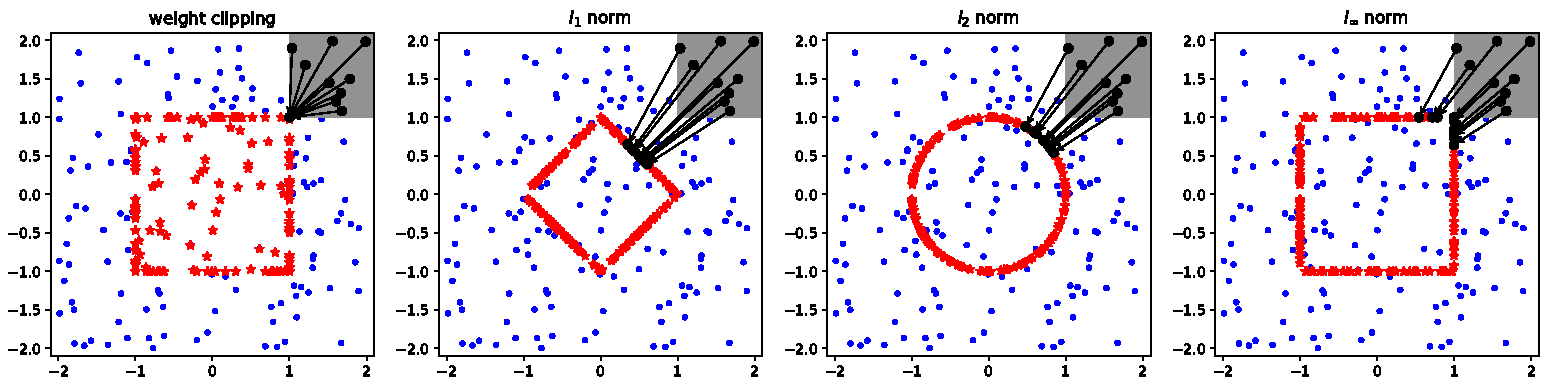

Wasserstein generative adversarial networks (Wasserstein GANs, WGAN) improve the performance of GANs significantly by imposing a Lipschitz constraint on the critic, which is implemented by weight clipping. In this work, we argue that weight clipping can cause a side effect called area collapse, because it heavily alters the orientations of the weights. To fix this issue, we present a novel method called Lp-WGAN, in which lp-norm normalization is used to impose the constraint. This method restricts the search space of the weights to a low-dimensional manifold and focuses the search on the orientations of the weights. Experiments on toy datasets show that Lp-WGAN could spread probability mass and find the underlying distribution earlier than WGAN with weight clipping. Results on the LSUN bedroom and CIFAR-10 datasets show that the proposed method could stabilize training better, generate competitive images earlier, and achieve higher evaluation scores.
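The contrast between the two constraints can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: the abstract does not say at which granularity the normalization is applied, so normalizing each row of a weight matrix (one row per unit) is an assumption, and the function names `clip_weights`, `lp_normalize`, and `cosine`, as well as the values of `c` and `p`, are hypothetical choices made for illustration.

```python
import numpy as np


def clip_weights(w, c=0.01):
    """Weight clipping: clamp every entry of w to the interval [-c, c]."""
    return np.clip(w, -c, c)


def lp_normalize(w, p=2.0, eps=1e-12):
    """Rescale each row of w to unit lp norm.

    Treating each row as one unit's weight vector is an assumption of
    this sketch, not something stated in the abstract.
    """
    norms = np.sum(np.abs(w) ** p, axis=1, keepdims=True) ** (1.0 / p)
    return w / np.maximum(norms, eps)


def cosine(a, b):
    """Cosine similarity between two vectors (1.0 means same orientation)."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Toy weight matrix: the first row points almost entirely along the first axis.
w = np.array([[3.0, 0.02],
              [0.5, -0.5]])

clipped = clip_weights(w, c=0.01)
normalized = lp_normalize(w, p=2.0)

# Clipping squashes the first row toward the diagonal, changing its orientation;
# normalization only rescales the row, so its orientation is preserved.
print("cosine after clipping:     ", cosine(w[0], clipped[0]))
print("cosine after normalization:", cosine(w[0], normalized[0]))
```

In this toy case, clipping changes the direction of the first weight vector while normalization changes only its length, which is the kind of orientation change the abstract attributes to weight clipping.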

Bibtex:

@article{ZHOU2018415,

title = "Lp-WGAN: Using Lp-norm normalization to stabilize Wasserstein generative adversarial networks",

journal = "Knowledge-Based Systems",

volume = "161",

issue = "2018",

pages = "415 -- 424",

year = "2018",

issn = "0950-7051",

doi = "https://doi.org/10.1016/j.knosys.2018.08.004",

url = "http://www.sciencedirect.com/science/article/pii/S0950705118304003",

author = "Changsheng Zhou and Jiangshe Zhang and Junmin Liu"

}